Navigating the Nuance: Ingredient checkers for your food and cosmetics

“Read the ingredients panel” is the catchphrase of many influencers and beauty blogs in this modern age of information overload. Never has checking ingredients on your cosmetics or food been so easy! The internet is awash with downloadables, databases and apps for checking product details, including ratings of “safety”. In this article, I break down how these resources should work, and how they stack up!

How should these ingredient resources work?

Let’s use baking a cake as an example. As I combine the ingredients in a bowl, I ask you to check if the oven is hot. What does that mean? In this context, “hot” is vague and non-specific. Someone with a little cooking experience might know that a “hot” oven is considered to be between 200 and 230° Celsius, but it’s still poorly defined. Beyond this, the answer to the question could easily be misunderstood and misapplied, depending on the context.

Think of these ingredient apps in the same way - they are asking the equivalent of “is the oven hot” about cosmetics or food by asking “is this toxic” or “is this safe”. But in this case, it’s far more complicated - because there are many variables that contribute to answering the question.

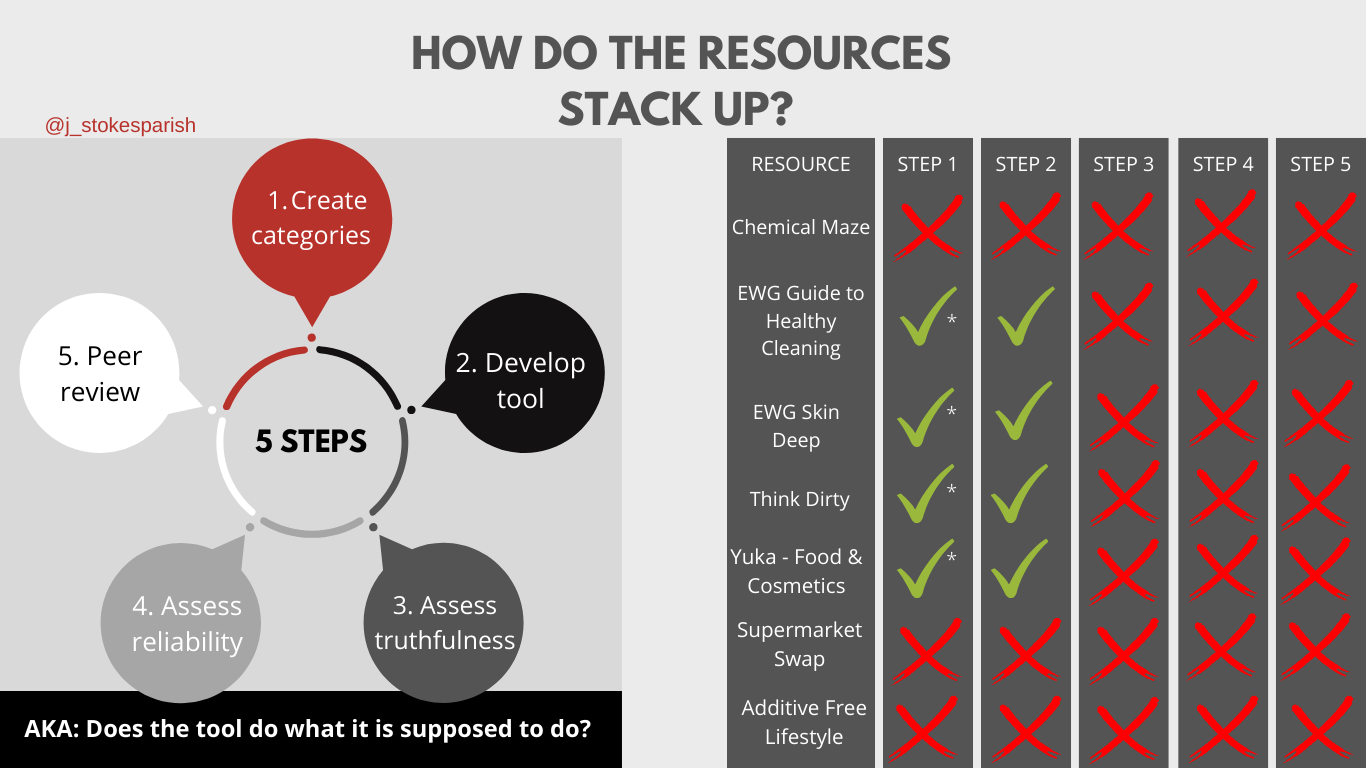

To be able to answer the question “is the oven hot”, the resource should include the following 5 steps:

Clearly define the category/rating (Step 1)

e.g.: A hot oven is defined as 200-230 degrees Celsius

e.g.: The criteria to meet this category are clearly defined: use of an oven, collected using a cooking-grade empirical thermometer, measured for a period of 10 minutes

Create the tool (Step 2)

e.g.: This might include creating a traffic light system (green, orange, red) or a rating system (“good, bad, caution”)

Test that the tool can distinguish between good and bad, and that it does so consistently across different users and different settings (Steps 3 & 4: “assess truthfulness and reliability”)

e.g.: We now apply these criteria to different brands of oven, different types of oven (gas vs electric), and with different foods inside, and use statistical analyses to assess reliability/consistency and more

Publish the detail of Steps 1, 2, 3 & 4 for scientific critique (Step 5)

e.g.: Publish each of these experiments in detail

(Cook et al, 2006; Pearson et al, 2010; Rickards et al, 2012; Streiner et al, 2014; Yong et al, 2013)

In case your eyes are glazing over at this point, here’s the TLDR: 5 steps should be taken to define a category and test that it’s reliable over time and under different circumstances.
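For anyone who wants to see what the reliability testing in Steps 3 & 4 might look like in practice, here is a minimal sketch. It assumes two people have each rated the same eight ovens with the traffic light system, and it calculates Cohen's kappa, one common statistic for agreement between two raters beyond what chance alone would produce. The ratings and the function name `cohens_kappa` are made up for illustration; they are not taken from any of the resources discussed here.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Agreement between two raters, corrected for chance agreement."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("Both raters must rate the same, non-empty set of items")

    n = len(ratings_a)

    # Proportion of items where the two raters gave the same rating
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n

    # Agreement expected by chance, based on how often each rater uses each category
    counts_a = Counter(ratings_a)
    counts_b = Counter(ratings_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if expected == 1.0:
        # Both raters used a single identical category for everything
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical ratings of the same eight ovens by two different people
rater_1 = ["green", "green", "orange", "red", "red", "green", "orange", "red"]
rater_2 = ["green", "orange", "orange", "red", "green", "green", "orange", "red"]

kappa = cohens_kappa(rater_1, rater_2)
print(f"Cohen's kappa: {kappa:.2f}")
```

A kappa of 1 means perfect agreement and 0 means agreement no better than chance. A real validation study would use far more items and raters, and would report how the data were collected.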

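Note: At this point it’s also really crucial to point out that there’s a difference between hazard and risk. The difference between these two is this: a hazard is something that has the potential to cause harm, while a risk is the likelihood that the hazard actually causes harm, which depends on how much of it you are exposed to and how. A hot oven is a hazard; the risk of a burn depends on whether you touch it. This is important to remember later ;)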
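To make the hazard versus risk distinction concrete, here is a small sketch using the hazard quotient, a screening ratio used in some chemical risk assessments: the estimated exposure divided by a reference dose considered unlikely to cause harm. All of the numbers and the ingredient itself are hypothetical, chosen only to show that the same hazard can produce very different risks.

```python
def hazard_quotient(exposure_mg_per_kg_day, reference_dose_mg_per_kg_day):
    """Ratio of estimated exposure to a reference dose (both in mg/kg/day)."""
    if reference_dose_mg_per_kg_day <= 0:
        raise ValueError("Reference dose must be positive")
    return exposure_mg_per_kg_day / reference_dose_mg_per_kg_day


# Hypothetical reference dose for a made-up ingredient.
# The hazard (what the ingredient can do) is identical in both scenarios below.
REFERENCE_DOSE = 0.5

# Scenario 1: trace amount in a product (hypothetical exposure)
trace_use = hazard_quotient(0.005, REFERENCE_DOSE)

# Scenario 2: very heavy daily exposure (hypothetical exposure)
heavy_use = hazard_quotient(1.0, REFERENCE_DOSE)

for label, hq in [("trace use", trace_use), ("heavy use", heavy_use)]:
    verdict = "below" if hq < 1 else "at or above"
    print(f"{label}: exposure is {verdict} the reference dose")
```

A rating built only from hazard information would score both scenarios the same, because it never looks at the exposure.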

So, how do they stack up?

Methods:

For those wanting to know how I assessed the resources in detail, read here. For those of you who just want the outcome, skip on by! I assessed whether the steps above had been applied in popular resources on the market (as sent in by readers). To do this, I searched the publicly available information about each resource and reviewed any samples provided. Using the criteria above, I assessed whether there was a description of the steps required for reliability. Note, I did not include assessment of the resource authors/founders and their interests, as I wanted to keep the focus on the resources, as opposed to individuals. In essence, I tried to determine:

Was there transparency of the methods used to create the categories?

Was there delineation between types of categories?

Was there available testing of the categories?

Was it published in any scientific literature?

While in most cases the creators have attempted to define categories (Step 1), this was done with varying levels of detail and rigour. For example, the Yuka tool for food uses a numeric rating that combines a nutritional rating (the Nutri-Score) with “the presence of additives” and whether the product is organic (Yuka, 2024). Nutri-Score is a scientific tool; however, the additive and organic components are arbitrarily set by the founders. They also change their rating approach for liquids versus solids, because “their glycemic indices are higher”. Importantly, Yuka provides no detail on who came up with this rating system, or whether it’s reliable. In an older example, Chemical Maze, a book created by an Australian homeopath, provides no public detail regarding how they came up with the categories and rating system, but has some smiley face graphics to tell you whether an ingredient is good or bad (Chemical Maze, 2011). In the same vein, Supermarket Swap provides no detail regarding categories, and the only reference to research on their website indicates it was part of a project by “qualified health professionals and a food scientist to research additives and preservatives used in Australia” (Supermarket Swap, 2024). Additive-Free Lifestyle is much the same, and uses smiley face graphics similar to those in Bill Statham’s Chemical Maze.

The guides that do make some attempt at creating a rating scale (EWG, ThinkDirty, and Yuka) do not delineate between hazard and risk. For example, EWG provides significant levels of detail in their description of methodology, but they make recommendations for “risk” from information that is about hazard (EWG, 2024). This is common, as hazard and risk are frequently used interchangeably; they are related, but they are not the same. Similarly, ThinkDirty says that they create their risk categories from health information about ingredients (ThinkDirty, 2024); that information is about hazard, not risk.

Overall, these resources do not meet the usual standard of science for assessment tools; they probably do not provide you with reliable indicators of “safe” or “not safe” ingredients.

As I scanned the internet, health and beauty articles were full of recommendations and (positive) analyses of these resources. One thing was clear to me: they obfuscate the actual evidence with science-sounding language, and shift the onus to the individual to “empower” health. All of this ignores the socioeconomic determinants of health, such as access to clean water, air, and education (at the core of which is financial status). TLDR? Basically, financial security means most of your health concerns will be markedly reduced. That might be a conversation for another day.

Signing off,

JSP x

P.S. If anyone is interested in collaborating on a more scientific content analysis (i.e. for publication) of these kinds of resources, hit me up…!

References:

Chemical Maze website, 2011. Accessed 7/4/2024.

Cook, D.A. and T.J. Beckman, Current concepts in validity and reliability for psychometric instruments: theory and application. The American Journal of Medicine, 2006. 119(2): p. 166.e7-166.e16.

EWG website, 2024. Accessed 7/4/2024.

Pearson, R.H. and D.J. Mundform, Recommended sample size for conducting exploratory factor analysis on dichotomous data. Journal of Modern Applied Statistical Methods, 2010. 9(2): p. 5.

Rickards, G., C. Magee, and A.R. Artino Jr., You Can't Fix by Analysis What You've Spoiled by Design: Developing Survey Instruments and Collecting Validity Evidence. Journal of Graduate Medical Education, 2012. 4(4): p. 407-410.

Streiner, D.L. and J. Kottner. Recommendations for reporting the results of studies of instrument and scale development and testing. 2014.

Supermarket Swap website, 2024. Accessed 7/4/2024.

ThinkDirty website, 2024. Accessed 7/4/2024.

Yong, A.G. and S. Pearce, A beginner’s guide to factor analysis: Focusing on exploratory factor analysis. Tutorials in Quantitative Methods for Psychology, 2013. 9(2): p. 79-94.

Yuka website, 2024. Accessed 7/4/2024.